Abstract from Some of My Papers (2/6)


Keywords: Rearrangeable, fabrication, pixel art, refracted light, mixed integer problem


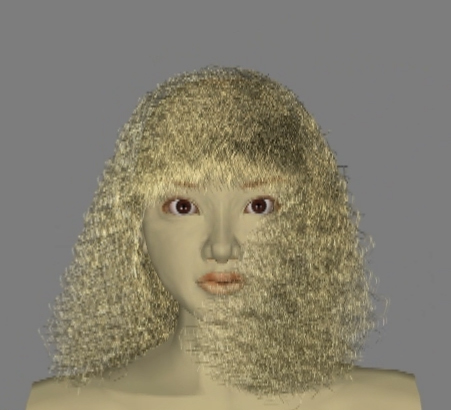

Keywords: hair simulation, shape matching, GPU, individual hair strand

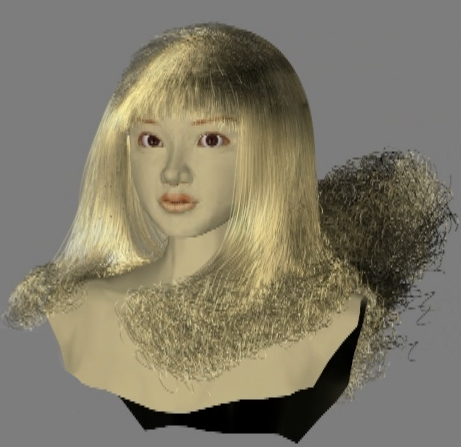

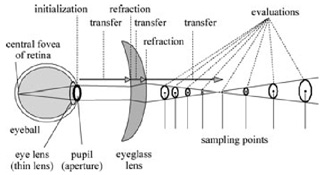

Keywords: progressive lens, wavefront tracing, conoid tracing, defocus, depth of field



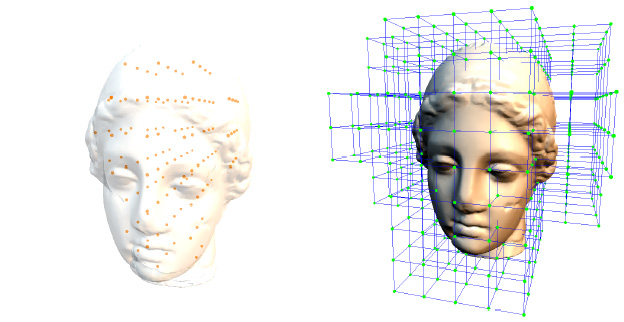

input (hand-drawn) ----> output (3D model)

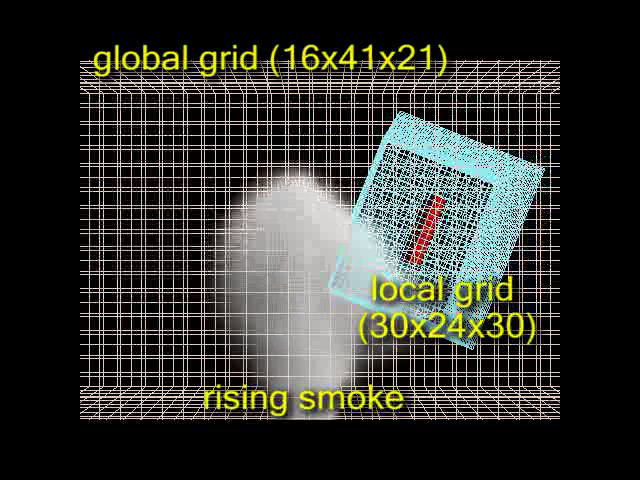

"A Modeling and Rendering Method for Snow by Using Metaballs"

by Tomoyuki Nishita, Iwasaki, Yoshinori Dobashi, and Eihachiro Nakamae

Abstract

The display of natural scenes such as mountains, trees, the earth as viewed from space, the sea, and waves has been attempted. Here, a method for realistically displaying snow is proposed. To achieve this, two important elements have to be considered, namely the shape and the shading model of snow, both based on the underlying physical phenomena. In this paper, a method for displaying snow fallen onto objects, including curved surfaces, and snow scattered by objects, such as skis, is proposed. Snow should be treated as particles with a density distribution, since it consists of water particles, ice particles, and air molecules. To express the material properties of snow, the phase functions of the particles must be taken into account; it is well known that snow appears white because of the multiple scattering of light.

This paper describes a method for modeling snow and for calculating the light scattering due to snow particles, taking into account both multiple scattering and sky light.

Key words

snow, multiple scattering, Mie scattering, metaball, volume rendering
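As a rough illustration of the density-distribution view of snow described in the abstract, the sketch below sums metaball field functions into a density and integrates it along a ray to obtain a transmittance. The degree-six falloff polynomial, the `(center, radius, weight)` tuple layout, the extinction coefficient `sigma`, and the midpoint sampling are all assumptions chosen for illustration; the paper's own field function and its multiple-scattering calculation are not reproduced here.

```python
import math

def field(r, radius):
    """Assumed degree-six falloff: 1 at the center, 0.5 at radius/2, 0 at r >= radius."""
    if r >= radius:
        return 0.0
    a2 = (r / radius) ** 2
    return -4.0 / 9.0 * a2 ** 3 + 17.0 / 9.0 * a2 ** 2 - 22.0 / 9.0 * a2 + 1.0

def density(p, balls):
    """Density at point p as the weighted sum of all metaball fields."""
    return sum(q * field(math.dist(p, c), radius) for c, radius, q in balls)

def transmittance(origin, direction, length, balls, sigma=1.0, steps=200):
    """exp(-optical depth) along a ray, using midpoint sampling of the density."""
    dt = length / steps
    tau = 0.0
    for k in range(steps):
        t = (k + 0.5) * dt
        p = tuple(o + t * d for o, d in zip(origin, direction))
        tau += sigma * density(p, balls) * dt
    return math.exp(-tau)

# One hypothetical metaball at the origin with radius 1 and weight 2.
balls = [((0.0, 0.0, 0.0), 1.0, 2.0)]
through = transmittance((-2.0, 0.0, 0.0), (1.0, 0.0, 0.0), 4.0, balls)
missing = transmittance((-2.0, 5.0, 0.0), (1.0, 0.0, 0.0), 4.0, balls)
```

A ray that passes through the metaball is attenuated, while a ray that misses it keeps a transmittance of 1. Single attenuation like this is only the first ingredient; the white appearance of snow noted in the abstract comes from multiple scattering, which this sketch does not model.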

Additional information

pdf-file(bad quality) (10.8MB)

"Method for Calculation of Sky Light Luminance Aiming at an Interactive Architectural Design"

by Yoshinori Dobashi, Kazufumi Kaneda, Hideo Yamashita, and Tomoyuki Nishita

Abstract

Recently, computer graphics has been frequently used for both architectural design and visual environmental assessment. Using computer graphics, designers can easily compare the effect of natural light on their architectural designs under various conditions, such as different times of day, seasons, atmospheric conditions (clear or overcast sky), or building wall materials. In traditional methods of calculating the luminance due to sky light, however, all calculations must be performed from scratch whenever such conditions change. Therefore, comparing architectural designs under different conditions requires a great deal of time for generating the images.

This paper proposes a new method of quickly generating images of an outdoor scene, taking into account glossy specular reflection, even if such conditions change. In this method, the luminance due to sky light is expressed by a series of basis functions, and the basis luminances corresponding to each basis function are precalculated and stored in a compressed form in a preprocess. Once the basis luminances are calculated, the luminance due to sky light can be quickly calculated as the weighted sum of the basis luminances.

Several examples of architectural design demonstrate the usefulness of the proposed method.
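The weighted-sum step described in the abstract can be sketched as follows. This is a minimal illustration, assuming each basis luminance is stored as a flat list of per-pixel values and that the weights for the current sky condition are already known; the function name `relight`, the data layout, and the numbers are hypothetical, and the compression of the basis luminances mentioned in the abstract is omitted.

```python
def relight(basis_luminances, weights):
    """Per-pixel weighted sum of precomputed basis luminance images."""
    n_pixels = len(basis_luminances[0])
    return [
        sum(w * basis[i] for w, basis in zip(weights, basis_luminances))
        for i in range(n_pixels)
    ]

# Two hypothetical basis images of three pixels each (computed once, in the preprocess).
basis_luminances = [
    [1.0, 2.0, 0.0],
    [0.0, 1.0, 4.0],
]

# Changing the sky condition only changes the weights, not the basis images.
image = relight(basis_luminances, [0.5, 0.25])
```

The point of the design is that the expensive part (the basis luminances) is independent of the sky condition, so a new condition costs only one multiply-add per basis function per pixel.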

Key Words:

snow, multiple scattering, Mie scattering, metaball, volume rendering

Additional information

pdf-file(mono color) (11.5MB)


"A Quick Rendering Method using Basis Functions for Interactive Lighting Design"

by Yoshinori Dobashi, Kazufumi Kaneda, Takanobu Nakashima, Hideo Yamashita, and Tomoyuki Nishita

Abstract

When designing interior lighting effects, it is desirable to compare a variety of lighting designs involving different lighting devices and directions of light. It is, however, time-consuming to generate images with many different lighting parameters, taking interreflection into account, because all luminances must be recalculated for each change. This makes it difficult to design lighting effects interactively. To address this problem, this paper proposes a method of quickly generating images of a given scene illustrating an interreflective environment illuminated by sources with arbitrary luminous intensity distributions.

In the proposed method, the luminous intensity distribution is expressed with basis functions. The proposed method uses a series of spherical harmonic functions as basis functions, and calculates in advance each intensity on surfaces lit by light sources whose luminous intensity distributions are the same as the spherical harmonic functions. The proposed method makes it possible to generate images so quickly that the luminous intensity distribution can be changed interactively.

By combining the proposed method with an interactive walk-through that employs intensity mapping, an interactive system for lighting design is implemented. The usefulness of the proposed method is demonstrated by its application to interactive lighting design, where many images are generated by altering lighting devices and/or the direction of light.
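To make the idea of expressing a luminous intensity distribution with spherical harmonics concrete, the sketch below projects a distribution onto the real spherical harmonics of order 0 and 1 and reconstructs it from the resulting coefficients. The truncation at order 1, the midpoint quadrature, and the function names are assumptions for illustration; the abstract does not state how many terms or which integration scheme the paper uses.

```python
import math

def real_sh_l1(direction):
    """Real spherical harmonics up to order 1 for a unit direction (x, y, z)."""
    x, y, z = direction
    k0 = 0.5 * math.sqrt(1.0 / math.pi)
    k1 = math.sqrt(3.0 / (4.0 * math.pi))
    return [k0, k1 * y, k1 * z, k1 * x]

def project(intensity, n_theta=64, n_phi=128):
    """Coefficients c_k = integral of intensity * Y_k over the sphere (midpoint rule)."""
    coeffs = [0.0] * 4
    d_theta = math.pi / n_theta
    d_phi = 2.0 * math.pi / n_phi
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta
        for j in range(n_phi):
            phi = (j + 0.5) * d_phi
            d = (math.sin(theta) * math.cos(phi),
                 math.sin(theta) * math.sin(phi),
                 math.cos(theta))
            w = intensity(d) * math.sin(theta) * d_theta * d_phi
            for k, y in enumerate(real_sh_l1(d)):
                coeffs[k] += w * y
    return coeffs

def reconstruct(coeffs, direction):
    """Evaluate the truncated expansion in a given direction."""
    return sum(c * y for c, y in zip(coeffs, real_sh_l1(direction)))

# Hypothetical luminaire that is brightest along +z: I(d) = 1 + z.
coeffs = project(lambda d: 1.0 + d[2])
up = reconstruct(coeffs, (0.0, 0.0, 1.0))
```

Because rendering is linear in the source intensity, the same coefficients could weight images precomputed for sources shaped like each basis function, which is the step the abstract describes as calculating the intensities in advance.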

Key Words:

Additional information

pdf-file(mono color) (13.5MB)


"A Method for Displaying Metaballs by using Bezier Clipping"

by Tomoyuki Nishita and Eihachiro Nakamae

Abstract

For rendering curved surfaces, one of the most popular techniques is metaballs, an implicit model based on isosurfaces of potential fields. This technique is suitable for deformable objects and CSG models. For rendering metaballs, intersection tests between rays and isosurfaces are required. Defining the field functions with higher-degree functions yields richer capability, i.e., smoother surfaces. However, one problem is that the intersection between a ray and the isosurfaces cannot be solved analytically for such high-degree functions.

In this paper, the field function is expressed by a degree-six polynomial, which means that a degree-six equation must be solved for the intersection test. Even so, by expressing the field function along the ray with Bezier functions and employing Bezier Clipping, our algorithm finds the roots of this function very efficiently and precisely. This paper also discusses deformed distribution functions such as ellipsoids, and a method for displaying transparent objects such as clouds.
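The root-finding idea can be sketched in one dimension. Given the Bernstein (Bezier) coefficients of a polynomial on [0, 1], the convex hull of its control polygon bounds where roots can lie, so the interval is repeatedly clipped to the hull's intersection with the axis. This is a minimal sketch under assumptions: the function names, the 0.8 bisection threshold, and the tolerances are illustrative choices, and it is not claimed to match the paper's implementation.

```python
def split(b, t):
    """de Casteljau: split Bernstein coefficients b at t into (left, right)."""
    left, right, work = [b[0]], [b[-1]], list(b)
    while len(work) > 1:
        work = [(1 - t) * p + t * q for p, q in zip(work, work[1:])]
        left.append(work[0])
        right.append(work[-1])
    return left, right[::-1]

def hull_span(b):
    """Sub-interval of [0, 1] where the control polygon's convex hull meets the axis."""
    n = len(b) - 1
    xs = [i / n for i in range(n + 1)]
    hits = [x for x, c in zip(xs, b) if c == 0.0]
    for i in range(n + 1):
        for j in range(i + 1, n + 1):
            if b[i] * b[j] < 0.0:  # segment between points i and j crosses the axis
                hits.append(xs[i] + (xs[j] - xs[i]) * b[i] / (b[i] - b[j]))
    return min(hits), max(hits)

def bezier_clip_roots(b, lo=0.0, hi=1.0, tol=1e-10):
    """Roots in [lo, hi] of the polynomial (degree >= 1) with Bernstein coefficients b."""
    if min(b) > 0.0 or max(b) < 0.0:
        return []  # hull misses the axis, so there is no root here
    if all(c == 0.0 for c in b):
        return []  # identically zero: no isolated root to report
    t0, t1 = hull_span(b)
    t0, t1 = max(0.0, t0 - 1e-12), min(1.0, t1 + 1e-12)  # small guard against rounding
    new_lo, new_hi = lo + t0 * (hi - lo), lo + t1 * (hi - lo)
    if new_hi - new_lo < tol:
        return [0.5 * (new_lo + new_hi)]
    if t1 - t0 > 0.8:  # weak clip, possibly several roots: bisect instead
        left, right = split(b, 0.5)
        mid = 0.5 * (lo + hi)
        return (bezier_clip_roots(left, lo, mid, tol)
                + bezier_clip_roots(right, mid, hi, tol))
    left, _ = split(b, t1)             # restrict to [0, t1]
    _, clipped = split(left, t0 / t1)  # then to [t0, t1]
    return bezier_clip_roots(clipped, new_lo, new_hi, tol)

# (t - 0.25)(t - 0.75) in Bernstein form of degree 2.
roots = bezier_clip_roots([0.1875, -0.3125, 0.1875])
```

In a ray tracer the coefficients would come from the field function restricted to the ray segment inside a metaball's radius, and only the smallest root would be needed for the nearest intersection. A root that falls exactly on a bisection point may be reported twice by this sketch.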

Key Words:

Metaballs, Blobs, Soft objects, Density function, Ray tracing,

Bezier Clipping, Deformable objects, Geometric Modeling, Photo-realism

Additional information

pdf-file(bad quality) (10.6MB)

"Skylight for Interior Design"

by Yoshinori Dobashi, Kazufumi Kaneda, Eihachiro Nakashima, Hideo Yamashita, Tomoyuki Nishita, and K. Tadamura

Abstract

Rendering a room lit by natural light is indispensable for indoor lighting design, especially for an atelier or an indoor pool where there are many windows. This paper proposes a method for calculating the illuminance due to natural light, i.e., direct sunlight and skylight, passing through transparent planes such as window glass. The proposed method calculates such illuminance efficiently and accurately because it takes into account both the non-uniform luminous intensity distribution of skylight and the variation of the transparency of glass with the incident angle of light. Several examples, including the lighting design of an indoor pool, are shown to demonstrate the usefulness of the proposed method.
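One common way to model how the transparency of glass depends on the incident angle is the Fresnel equations. The sketch below evaluates the transmittance of unpolarized light across a single air-to-glass interface; the refractive index of 1.5, the single-interface simplification, and the function name are assumptions, and the abstract does not say which transmittance model the paper uses.

```python
import math

def fresnel_transmittance(theta_i, n=1.5):
    """Unpolarized transmittance across one air-to-glass interface (angle in radians)."""
    cos_i = math.cos(theta_i)
    sin_t = math.sin(theta_i) / n            # Snell's law
    cos_t = math.sqrt(1.0 - sin_t * sin_t)
    r_s = ((cos_i - n * cos_t) / (cos_i + n * cos_t)) ** 2  # s-polarized reflectance
    r_p = ((n * cos_i - cos_t) / (n * cos_i + cos_t)) ** 2  # p-polarized reflectance
    return 1.0 - 0.5 * (r_s + r_p)

normal = fresnel_transmittance(0.0)
oblique = fresnel_transmittance(math.radians(30.0))
grazing = fresnel_transmittance(math.radians(80.0))
```

Transmittance stays close to its normal-incidence value over a wide range of angles and then drops sharply toward grazing incidence, which is why treating window glass as uniformly transparent can misestimate the light from parts of the sky seen at steep angles.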

Additional information

pdf-file(mono color) (11.9MB)


"A New Radiosity Approach Using Area Sampling for Parametric Patches"

by Tomoyuki Nishita and Eihachiro Nakamae

Abstract

A high precision illumination model is indispensable for lighting

simulation and realistic image synthesis.

For the purpose of improving realism, research on global illumination

has been done, and several papers on radiosity methods have been presented.

In the most recently proposed methods, the shapes of light sources

and objects are restricted to polygons or simple curved surfaces.

We present a more general method which can handle the kind of

free-form surfaces widely used in industrial products and in architecture.

The method proposed here solves the problem of the interreflection of

light (i.e., radiosities) between patches, and

form-factors, which play an important role in this process,

are precisely calculated without aliasing through the use of an

area sampling method (i.e., pyramid tracing).

Furthermore, the method can handle both non-uniform intensity curved

sources and non-diffuse surfaces.
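For context, the interreflection problem that form-factors feed into can be written as B_i = E_i + rho_i * sum_j F_ij * B_j for diffuse patches. The sketch below solves that system by Gauss-Seidel iteration for form-factors that are simply given; the two-patch values are hypothetical, and the paper's actual contribution (computing form-factors for free-form patches by area sampling, and handling non-diffuse surfaces) is not shown.

```python
def solve_radiosity(emission, reflectance, form_factors, iterations=100):
    """Gauss-Seidel iteration for B_i = E_i + rho_i * sum_j F_ij * B_j."""
    b = list(emission)
    n = len(b)
    for _ in range(iterations):
        for i in range(n):
            gathered = sum(form_factors[i][j] * b[j] for j in range(n))
            b[i] = emission[i] + reflectance[i] * gathered
    return b

# Two hypothetical patches facing each other; only the first one emits light.
emission = [1.0, 0.0]
reflectance = [0.5, 0.5]
form_factors = [[0.0, 0.5],
                [0.5, 0.0]]
radiosities = solve_radiosity(emission, reflectance, form_factors)
```

For this small system the exact solution is B = (16/15, 4/15): the emitter ends up slightly brighter than its own emission because some of its light is reflected back by the second patch.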

Key Words:

Radiosity, Interreflection of light, Form-factor,

Bezier Surfaces, Scan line algorithm, Shadows, Penumbra

Additional information

pdf-file(bad quality) (16MB)

"Modeling of Skylight and Rendering of Outdoor Scenes"

by K. Tadamura, E. Nakamae, K. Kaneda, M. Baba, H. Yamashita, and T. Nishita

Abstract

Photorealistic animated images are extremely effective for pre-evaluating the visual impact of city renewal and the construction of tall buildings. In order to generate a photorealistic image, not only direct sunlight but also skylight must be considered. This paper proposes a method of high-fidelity image generation for photorealistic outdoor scenes based on the following ideas:

(1) An intensity distribution of skylight is sought that takes into account scattering and absorption due to particles in the atmosphere and that coincides with the CIE standard skylight luminance functions, so that realistic images accounting for the spectral distribution of skylight can be displayed easily and accurately for any altitude of the sun.

(2) A rectangular parallelepiped with a specialized distribution of intensity simulating the skylight is introduced for efficient calculation of illumination due to skylight, and by employing graphics hardware, the skylight illuminance, taking shadow effects into account, is obtained with high efficiency. These techniques can be used to generate sequences of images, making animations possible at far lower calculation cost than previous methods.
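As a point of reference for the CIE standard skylight luminance functions mentioned in idea (1), the sketch below evaluates the CIE standard overcast sky, L = Lz (1 + 2 sin(elevation)) / 3, and integrates it over the hemisphere to obtain the illuminance on a horizontal surface. Only the overcast case is shown, the function names and the midpoint integration are illustrative assumptions, and the paper's own scattering-based sky model is not reproduced.

```python
import math

def cie_overcast_luminance(elevation, zenith_luminance=1.0):
    """CIE standard overcast sky luminance at a given elevation angle (radians)."""
    return zenith_luminance * (1.0 + 2.0 * math.sin(elevation)) / 3.0

def horizontal_illuminance(luminance, steps=1000):
    """Integrate an azimuthally symmetric sky luminance over the hemisphere."""
    d = (math.pi / 2.0) / steps
    total = 0.0
    for k in range(steps):
        e = (k + 0.5) * d
        # sin(e) is the cosine factor for a horizontal surface, cos(e) the solid-angle factor
        total += luminance(e) * math.sin(e) * math.cos(e) * d
    return 2.0 * math.pi * total

zenith = cie_overcast_luminance(math.pi / 2.0)
horizon = cie_overcast_luminance(0.0)
illuminance = horizontal_illuminance(cie_overcast_luminance)
```

With unit zenith luminance the horizon is one third as bright as the zenith, and the integral agrees with the closed-form value 7π/9.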

Additional information

pdf-file(bad quality) (16MB)


"Computer Graphics for Visualizing Simulation Results"

by Eihachiro Nakamae, Hideo Yamashita, K. Harada, and Tomoyuki Nishita

Abstract

Computer graphics techniques for visualizing the following simulation results are developed:

(1) lighting designs for different types of sources, such as point sources, linear sources, area sources, and polyhedron sources,

(2) shaded time at arbitrary positions such as windows, walls, and even the inside of a room,

(3) montages for view environment evaluation,

(4) quasi-semi-transparent models for observing the life generation process in anatomy, and

(5) two- and three-dimensional magnetic fields analyzed by the finite element method.

Additional information

pdf-file(mono color) (16.4MB)


Last update: 10 Sept. 2003